Limitations of current automatic specification generation tools

Have you ever wondered how automatic specification generation can streamline API security scanning? By significantly reducing the manual effort involved in creating and maintaining API specifications, automated tools offer clear benefits in terms of scan accuracy and setup time.

Yet, many existing tools on the market have notable limitations. Understanding these constraints is essential to fully appreciate how Escape's approach to automated spec generation stands out.

Code-first tools

Code-first tools, like Swagger Codegen and SpringFox, generate OpenAPI specifications directly from code annotations and structures.

These tools significantly reduce the manual labor involved in creating API documentation by leveraging annotations within the codebase.

However, their heavy reliance on these annotations introduces several limitations:

- Annotation Dependence: The quality and completeness of the generated specifications are directly tied to the accuracy and comprehensiveness of the annotations. If developers omit, misuse, or inconsistently apply annotations, the resulting specifications can be incomplete or inaccurate, leading to potential misunderstandings and integration issues.

- Limited Behavioral Capturing: Code-first tools often struggle to capture complex behaviors, constraints, and interdependencies between API components that are not explicitly annotated. This results in superficial documentation that fails to convey the full scope of the API’s functionality and limitations.

- Maintenance Challenges: Keeping the annotations up-to-date with evolving code can be challenging. As the codebase changes, developers must continually update the annotations to ensure the specifications remain accurate, which can be time-consuming and error-prone.

Spec-first tools

On the other hand, spec-first tools, like Stoplight and OpenAPI Generator, take a specification-first approach, allowing developers to design the API specification upfront and then generate code.

While this method provides a clear and structured way to define API contracts before implementation, it also presents several challenges:

- Upfront Investment: Creating detailed specifications before any code is written requires significant upfront investment. This can slow down the initial development process, as developers must thoroughly plan and document the API’s design before starting to write code.

- Alignment Maintenance: Ensuring that the evolving codebase remains aligned with the original specification is a persistent challenge. As the code changes, the specification must be manually updated to reflect these changes, requiring continuous effort to maintain synchronization between the two.

- Iterative Development: In agile and iterative development environments, the spec-first approach can be cumbersome. Rapid changes and frequent iterations necessitate constant updates to the specifications, which can become a bottleneck in the development process.

Machine Learning-Based Tools

Finally, both code-first and spec-first tools don’t provide a solution for older code bases.

Recent advancements in machine learning, particularly in Natural Language Processing (NLP), have led to the development of tools that generate API specifications from human-readable documentation

Two famous tools that follow this approach are D2Spec and SpecCrawler.

However, both these tools generate OpenAPI specifications from human-readable documentation, and the bulk of their work is to transform this human-readable information into properly typed machine-readable documentation.

This paper on how to use GPT-3 to automatically create RESTful Service descriptions also presents a similar approach.

To our knowledge only Respector provides a way to generate OAS from analysis of code (both with static analysis and symbolic program analysis).

However, this tool is limited to Java-based REST Frameworks, namely Spring Boot and Jersey. This restricts its applicability to a broader range of technologies used in diverse development environments.

In this context, providing software for language and framework OpenAPI specification generation through static analysis would be a major breakthrough.

How Escape's approach stands out

Generating an OpenAPI specification generation through traditional static analysis tools can be challenging due to their reliance on predefined rules and patterns. Because of these limitations, these tools struggle with the complexity and variability of real-world codebases, often missing nuanced context and dependencies. These limitations are even more salient if you are trying to build a framework and language-agnostic software.

Luckily, Large Language Models (LLMs) have recently become extremely good at analyzing and generating code. Moreover, they show great performance across a wide variety of code languages, frameworks, and coding styles, which is exactly what we want for framework and language-agnostic OAS generation software. Finally, LLMs can also leverage information in code comments, which the traditional static analysis approach cannot do.

In our current approach, we focused on two things:

- Semantic Analysis: We identify key code fragments using custom rules, reducing the data sent to the LLM while improving prompt quality. We'll soon publish an in-depth article on how we applied custom rules (for example, using a specific Semgrep pattern).

- Specification Generation: Each identified fragment is processed individually by the LLM to generate OAS methods. Contextualization ensures accurate results by resolving dependencies and references within the code.

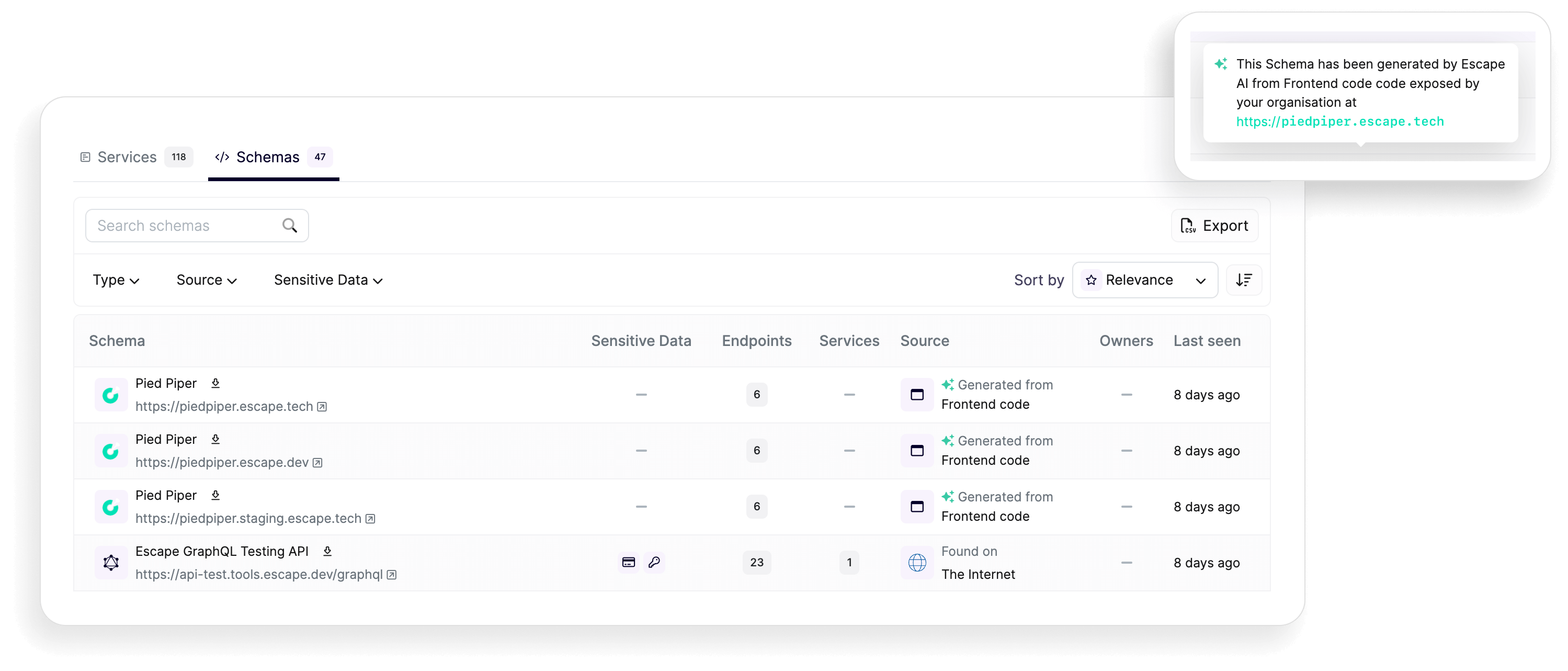

This approach helps Escape not only to generate API schemas but also continuously monitor for and detect any changes or versions in the API schema over time. This capability allows teams to track how their APIs evolve and ensure that all changes are documented and understood, reducing the risk of inconsistencies or integration issues.

Benefits

- Efficiency: Focus on relevant code parts reduces processing time and costs compared to traditional methods.

- Accuracy: Improved detection of endpoints and parameters enhances the quality of generated specifications without revealing proprietary algorithms.

We employed an iterative approach to measure the success of our backend and frontend static analysis for API specification generation. We ran our specification generation tool on a dataset comprising backend repositories and frontend assets for which we had access to ground-truth API specifications. This dataset included both proprietary and open-source data.

We iteratively refined our static analysis techniques by comparing the generated API specifications with the known ground-truth. This process allowed us to identify and correct inaccuracies, ensuring that our tool could generate accurate and comprehensive API specifications for both backend and frontend codebases.

Conclusion

Current automatic specification generation tools are helpful for creating and maintaining API documentation, but they have significant limitations. To overcome these challenges, we were looking for a better approach.

Escape's innovative approach, powered by Large Language Models and advanced semantic analysis, stands out by focusing on critical code fragments and using custom rules. This method ensures accurate specification generation across various frameworks and programming languages, overcoming the shortcomings of traditional static analysis tools. It not only simplifies API documentation processes but also supports agile development and continuous security testing, representing a significant step forward in automating API specification tasks.

Scale your security with a modern DAST like Escape

Test for complex business logic vulnerabilities without uploading API specifications directly within your modern stack

Get a demo with our product expert💡 Want to learn more? Discover the following articles: